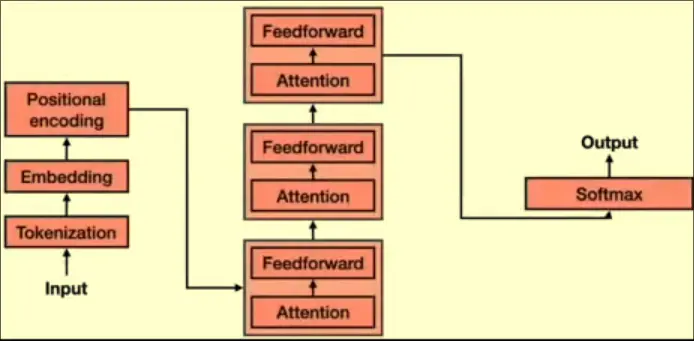

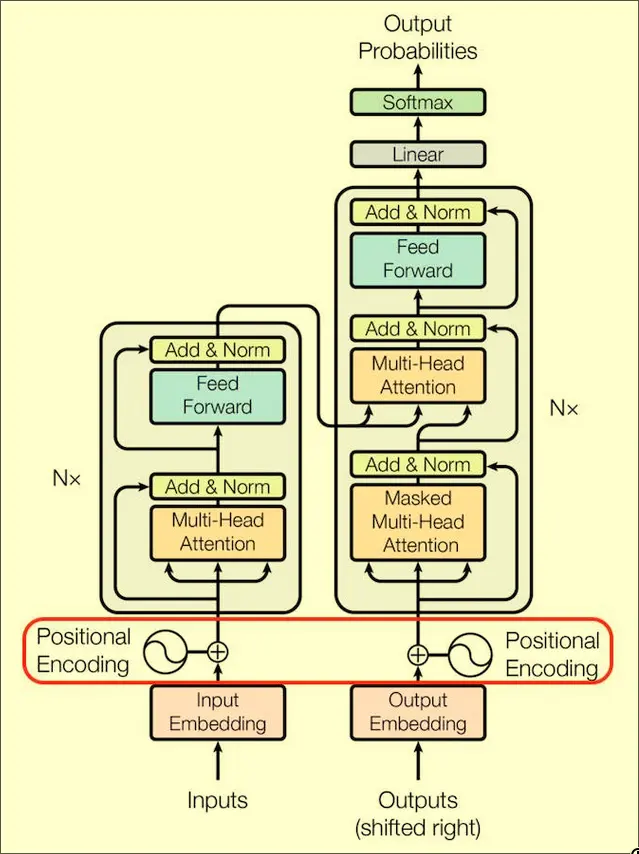

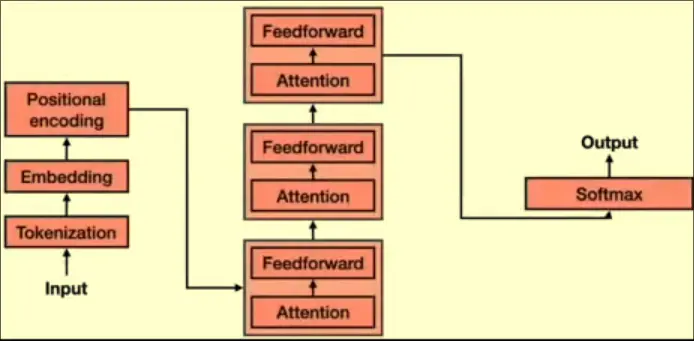

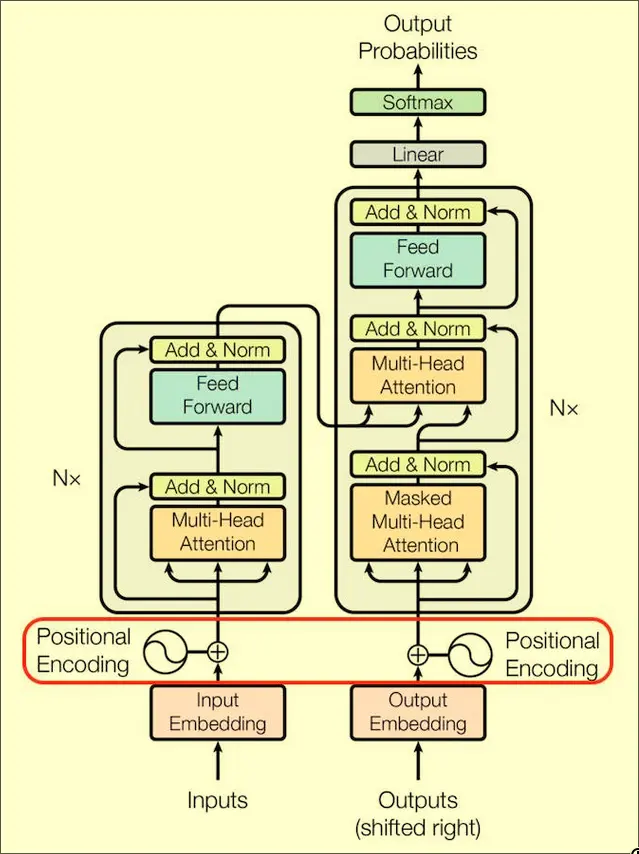

A form of generative model that takes input tokens and produces a probability distribution over the next output token. Sampling from that distribution repeatedly, and feeding each sampled token back in as input, continues generation.
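The sample-and-feed-back loop described above can be sketched roughly as follows. This is a minimal illustration, not any particular model's implementation: `VOCAB`, `next_token_distribution`, and `generate` are hypothetical names, and the probability table is made up to stand in for a trained model.

```python
import random

# Hypothetical toy vocabulary; a real model would have thousands of tokens.
VOCAB = ["the", "cat", "sat", "<eos>"]


def next_token_distribution(tokens):
    """Toy stand-in for a trained model (assumption, not a real API).

    Maps the tokens seen so far to probabilities over VOCAB. For simplicity
    it only looks at the last token, and the numbers are invented.
    """
    table = {
        "the": [0.0, 0.9, 0.1, 0.0],
        "cat": [0.1, 0.0, 0.8, 0.1],
        "sat": [0.2, 0.0, 0.0, 0.8],
    }
    # Any other token (including "<eos>") leads straight to "<eos>".
    return table.get(tokens[-1], [0.0, 0.0, 0.0, 1.0])


def generate(prompt, max_new_tokens=10, seed=0):
    """Repeatedly sample the next token and append it to the input."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_distribution(tokens)
        # Draw one token according to the predicted distribution.
        token = rng.choices(VOCAB, weights=probs, k=1)[0]
        tokens.append(token)
        if token == "<eos>":  # stop once the end-of-sequence token is drawn
            break
    return tokens


print(generate(["the"]))
```

Because each step samples rather than picking the most likely token, different seeds can give different continuations from the same prompt.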

Structure
