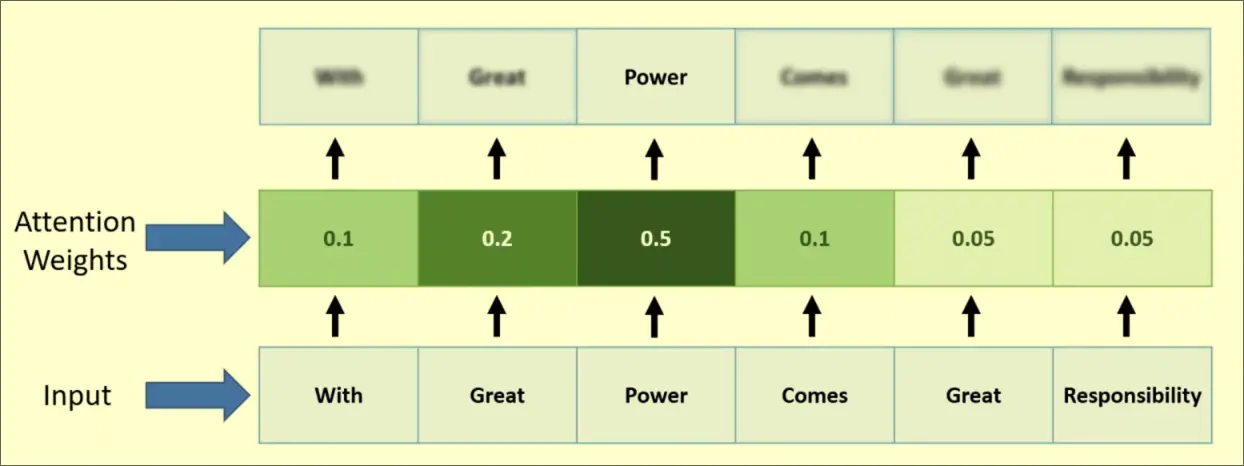

This is a Transformer layer that assigns attention weights to its inputs, so that each token draws most on the inputs that are most relevant to it.

Intuition

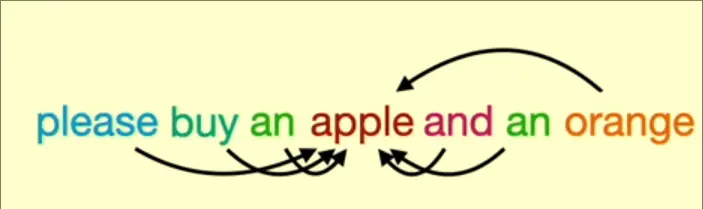

A ‘gravity’ is applied to all words, pulling them toward each other, so that each word is affected by the meaning of every other word.

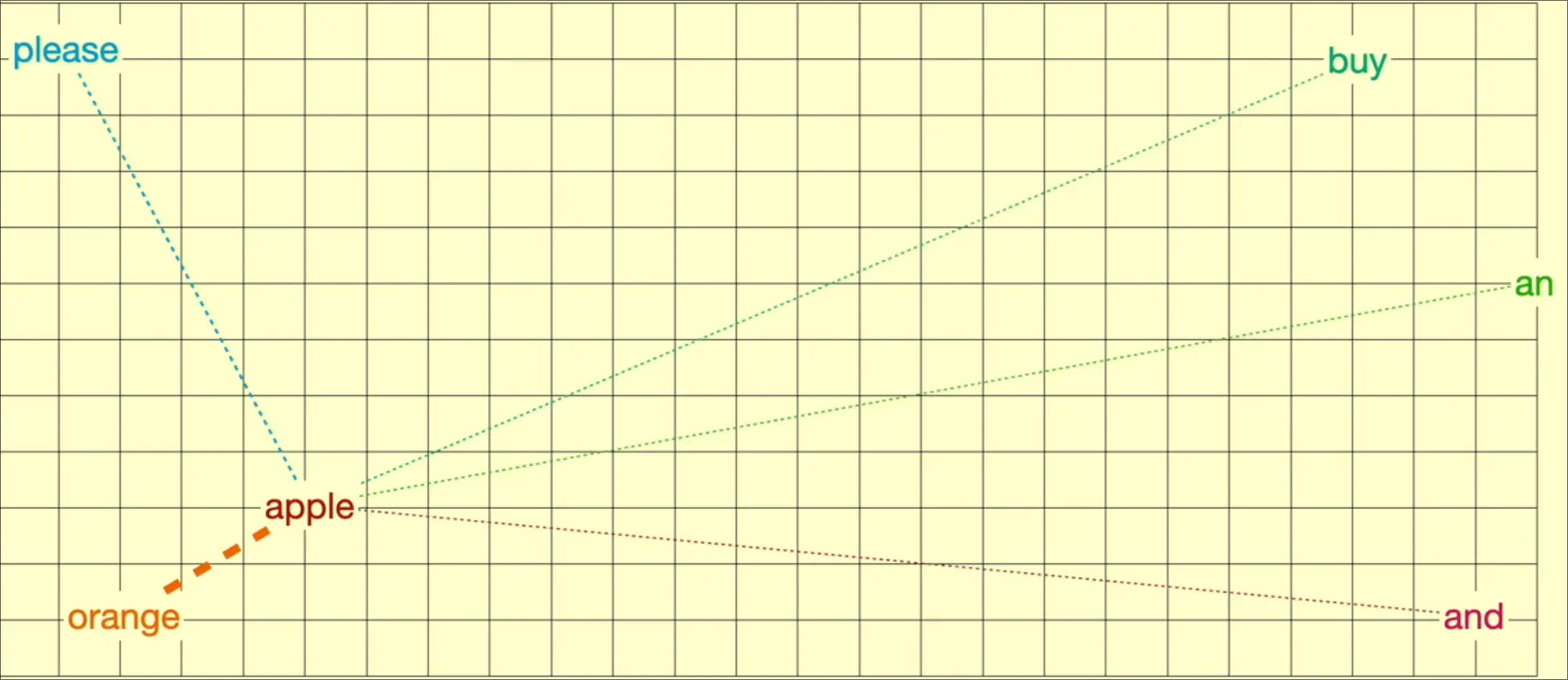

Then, after the pull, each embedding is shifted closer to the word's actual meaning in context.
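
The ‘gravity’ picture can be sketched in a few lines of NumPy. This is a minimal illustration, not a full attention layer: it assumes the pull strength between two words is the softmax of their scaled dot product, and it leaves out the learned query, key, and value projections a real Transformer uses. The names `self_attention`, `embeddings`, and `pulled` are made up for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum first for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(embeddings):
    """Pull each word embedding toward the others (no learned weights)."""
    d = embeddings.shape[-1]
    # Similarity of every word with every other word: shape (words, words).
    scores = embeddings @ embeddings.T / np.sqrt(d)
    # Each row becomes a set of attention weights that sums to 1.
    weights = softmax(scores, axis=-1)
    # New embedding = weighted average of all embeddings (the "pull").
    return weights @ embeddings, weights

# Toy input (assumption): 4 words with 8-dimensional random embeddings.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(4, 8))
pulled, weights = self_attention(embeddings)
```

Each row of `weights` says how strongly one word is pulled toward every word in the sequence, and `pulled` holds the adjusted embeddings, one per word, with the same shape as the input.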

Definition

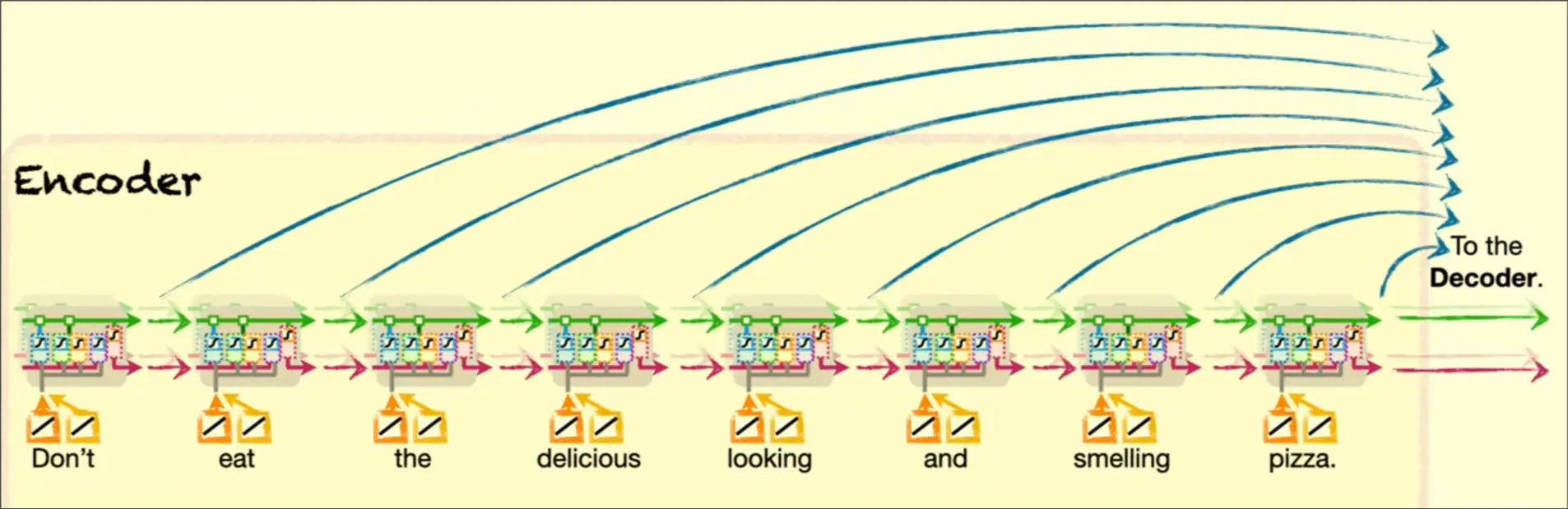

The layer is composed of several attention heads, each of which computes attention weights for the current token against the rest of the sequence.

Every head sends its value in parallel to the Feature Decoder.
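
As a rough sketch of the multi-head structure, the NumPy code below splits the model dimension across heads, runs every head in one batched operation (so they are computed in parallel), and then concatenates the results. Several things here are assumptions rather than details from these notes: the weight matrices `w_q`, `w_k`, `w_v`, and `w_o` are random stand-ins for learned parameters, and the final projection `w_o` is only a placeholder for whatever consumes the head outputs next, which these notes call the Feature Decoder.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the maximum first for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Sketch of multi-head self-attention over x with shape (seq_len, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads  # assumes d_model is divisible by num_heads

    def split(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    # All heads are computed at once: scores has shape (heads, seq, seq).
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ v  # (heads, seq, d_head)
    # Concatenate the heads back into (seq_len, d_model).
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    # Placeholder output projection (assumption, see lead-in).
    return concat @ w_o

# Toy setup (assumption): 5 tokens, model width 16, 4 heads, random weights.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 16, 4
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
```

Batching the heads along a leading axis is one common way to express the parallelism; a loop over heads would give the same result, only more slowly.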