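Back Propagation is the algorithm that computes the gradient of a neural network's Loss Function with respect to its Weights and Biases. It applies the chain rule layer by layer, from the output back to the input. Gradient descent then uses this gradient to adjust the corresponding weights and biases.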
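
As a concrete illustration, here is a minimal sketch of one forward pass followed by one backward pass. It assumes a hypothetical tiny network: one input, one sigmoid hidden unit, one linear output, and a squared-error loss. The names `loss_and_grads` and `numeric_grad` and the parameter keys `w1`, `b1`, `w2`, `b2` are made up for this example. A finite-difference check is included so you can confirm the chain-rule gradients are correct.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(params: dict, x: float, y: float) -> tuple[float, dict]:
    """Forward pass, then backpropagation, for a hypothetical 1-1-1 network.

    Assumed architecture (illustration only):
        h     = sigmoid(w1 * x + b1)    # hidden unit
        y_hat = w2 * h + b2             # linear output
        L     = 0.5 * (y_hat - y) ** 2  # squared-error loss
    """
    w1, b1, w2, b2 = params["w1"], params["b1"], params["w2"], params["b2"]

    # Forward pass: keep the intermediate values, the backward pass reuses them.
    z1 = w1 * x + b1
    h = sigmoid(z1)
    y_hat = w2 * h + b2
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: chain rule, from the loss back towards the input.
    d_yhat = y_hat - y            # dL/dy_hat
    d_w2 = d_yhat * h             # dL/dw2 = dL/dy_hat * dy_hat/dw2
    d_b2 = d_yhat                 # dL/db2
    d_h = d_yhat * w2             # dL/dh
    d_z1 = d_h * h * (1.0 - h)    # sigmoid'(z1) = h * (1 - h)
    d_w1 = d_z1 * x               # dL/dw1
    d_b1 = d_z1                   # dL/db1

    return loss, {"w1": d_w1, "b1": d_b1, "w2": d_w2, "b2": d_b2}


def numeric_grad(params: dict, name: str, x: float, y: float,
                 eps: float = 1e-6) -> float:
    """Central finite difference of the loss w.r.t. one parameter (a sanity check)."""
    plus = dict(params, **{name: params[name] + eps})
    minus = dict(params, **{name: params[name] - eps})
    return (loss_and_grads(plus, x, y)[0] - loss_and_grads(minus, x, y)[0]) / (2 * eps)


params = {"w1": 0.5, "b1": -0.2, "w2": 1.5, "b2": 0.1}
loss, grads = loss_and_grads(params, x=2.0, y=1.0)
for name in params:
    print(name, grads[name], numeric_grad(params, name, 2.0, 1.0))
```

Each analytic gradient should agree with its finite-difference estimate to several decimal places. Real networks use the same pattern with matrices in place of scalars.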

Process

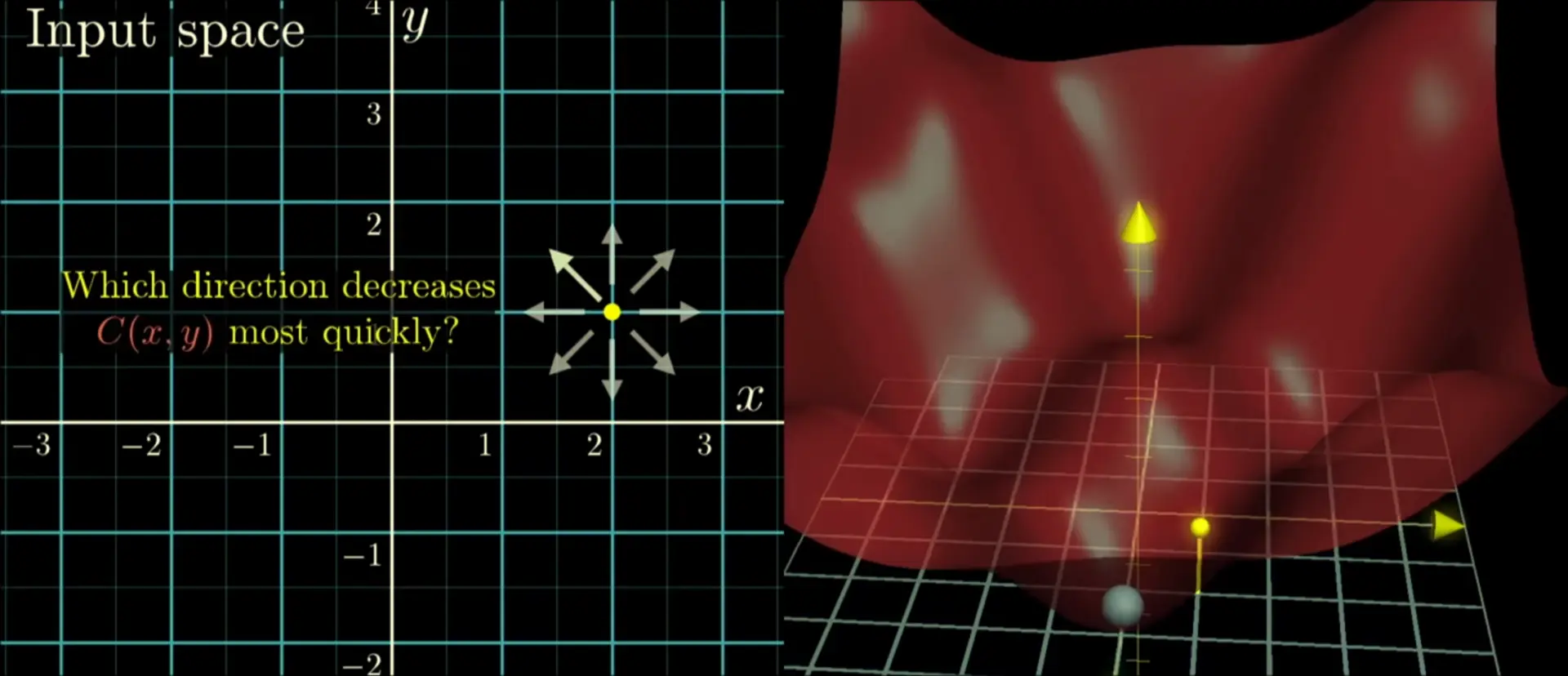

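- From a given loss function, compute the gradient and move the weights in the direction of the negative Gradient. The gradient points in the direction of steepest ascent, so its negative is the direction of steepest descent.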

- Repeat until the loss stops decreasing, i.e. until you have reached (or are close to) a local minimum.
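
The steps above can be sketched as a loop that applies $w \leftarrow w - \eta \, \frac{dL}{dw}$ until the gradient is nearly zero. This is a minimal one-parameter sketch. The loss $L(w) = w^4 - 3w^2 + w$, the learning rate `lr` ($\eta$), the tolerance `tol`, and the function names are all hypothetical choices. This loss was picked because it has two local minima, so the starting point decides which minimum gradient descent ends up in.

```python
from typing import Callable


def gradient_descent(grad: Callable[[float], float], w0: float,
                     lr: float = 0.01, tol: float = 1e-6,
                     max_steps: int = 100_000) -> tuple[float, int]:
    """Step against the gradient until it is (nearly) zero.

    A near-zero gradient means a stationary point; starting away from a
    maximum, gradient descent normally settles into a local minimum.
    """
    w = w0
    for step in range(max_steps):
        g = grad(w)
        if abs(g) < tol:      # slope is ~0: stop here
            return w, step
        w -= lr * g           # move in the direction of the negative gradient
    return w, max_steps


def loss(w: float) -> float:
    return w**4 - 3 * w**2 + w    # hypothetical loss with two local minima


def loss_grad(w: float) -> float:
    return 4 * w**3 - 6 * w + 1   # dL/dw


for start in (2.0, -2.0):
    w, steps = gradient_descent(loss_grad, start)
    print(f"start={start:+.1f} -> w={w:.4f}, loss={loss(w):.4f}, steps={steps}")
```

Starting at `2.0`, the loop settles near $w \approx 1.13$. Starting at `-2.0`, it reaches the lower minimum near $w \approx -1.30$. Both are points where the slope is zero, but only the second is the global minimum. In a neural network, `grad` would be the gradient that Back Propagation computes for every weight and bias.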