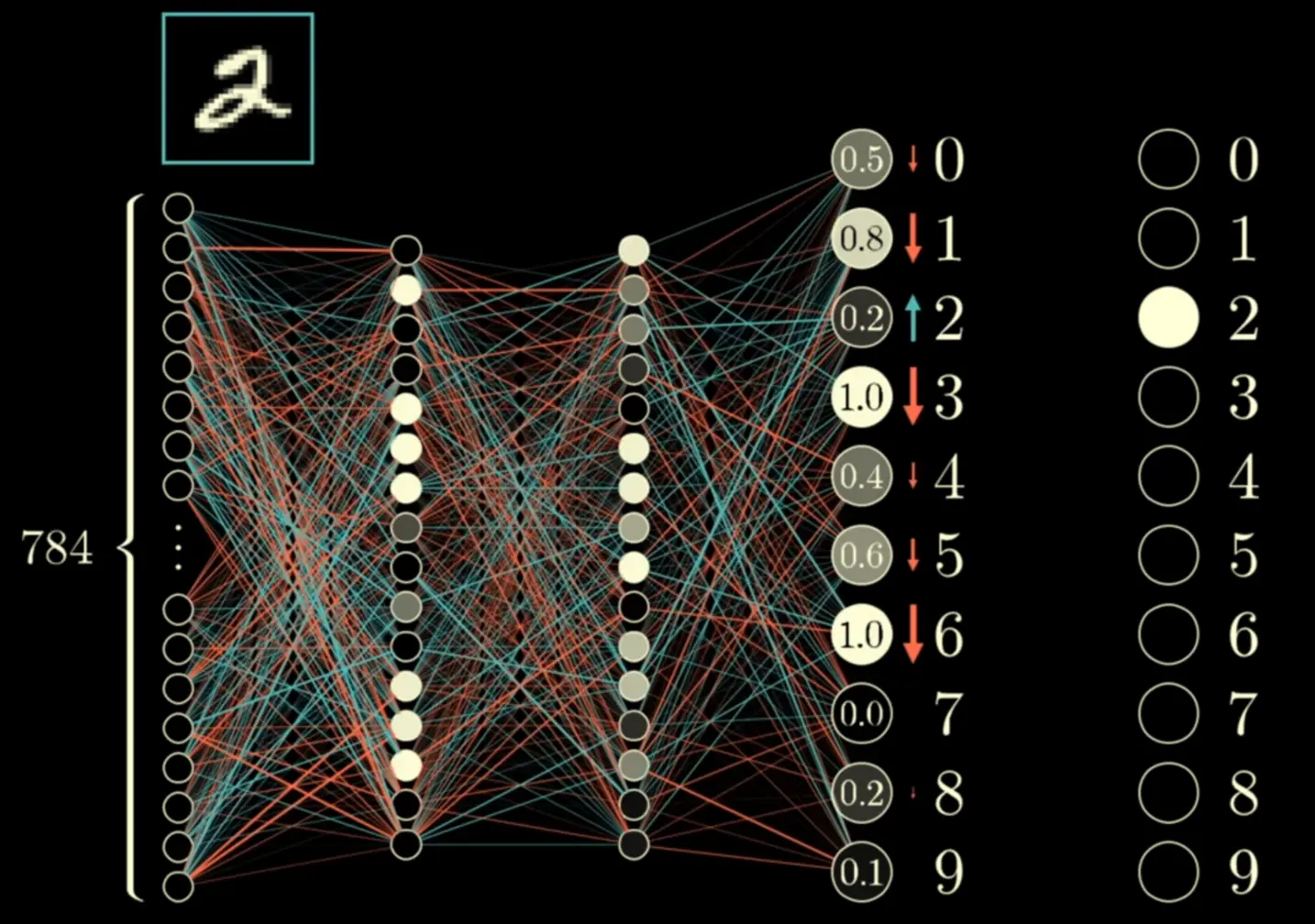

Backpropagation is the method used to revise the weights and biases of a neural network. The adjustments suggested by individual training examples are averaged over examples from all input categories, so that no single category shifts the weights and biases too much.
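A minimal sketch of the averaging idea follows. It assumes a single linear neuron `a = w*x + b` with a squared-error cost. The model, the function names (`example_gradient`, `averaged_gradient`, `train_step`) and the toy batch are all hypothetical and chosen only for illustration. Each example proposes its own nudge to `w` and `b`. The update uses the average of those nudges, so every example in the batch carries equal weight, whichever category it belongs to.

```python
# Hypothetical toy model: one linear neuron a = w * x + b, cost (a - y)^2.

def example_gradient(w, b, x, y):
    """Gradient of (w*x + b - y)^2 with respect to (w, b) for one example."""
    diff = w * x + b - y           # actual minus expected
    return 2 * diff * x, 2 * diff  # (dC/dw, dC/db)

def averaged_gradient(w, b, batch):
    """Average the per-example gradients over a batch of (x, y) pairs."""
    gw_total, gb_total = 0.0, 0.0
    for x, y in batch:
        gw, gb = example_gradient(w, b, x, y)
        gw_total += gw
        gb_total += gb
    n = len(batch)
    return gw_total / n, gb_total / n

def train_step(w, b, batch, learning_rate=0.1):
    """Move w and b a small step against the averaged gradient."""
    gw, gb = averaged_gradient(w, b, batch)
    return w - learning_rate * gw, b - learning_rate * gb

# Hypothetical batch mixing positive and negative inputs (two "categories"),
# all consistent with y = 2x.
batch = [(1.0, 2.0), (2.0, 4.0), (-1.0, -2.0), (-2.0, -4.0)]
w, b = 0.0, 0.0
for _ in range(200):
    w, b = train_step(w, b, batch)
print(w, b)
```

Because the step uses the batch average, adding more examples from one category changes that category's share of the update rather than simply stacking extra nudges on top of the others.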

Process

- For each neuron in the output layer, find the expected activation, compare it with the actual activation, and store the difference.

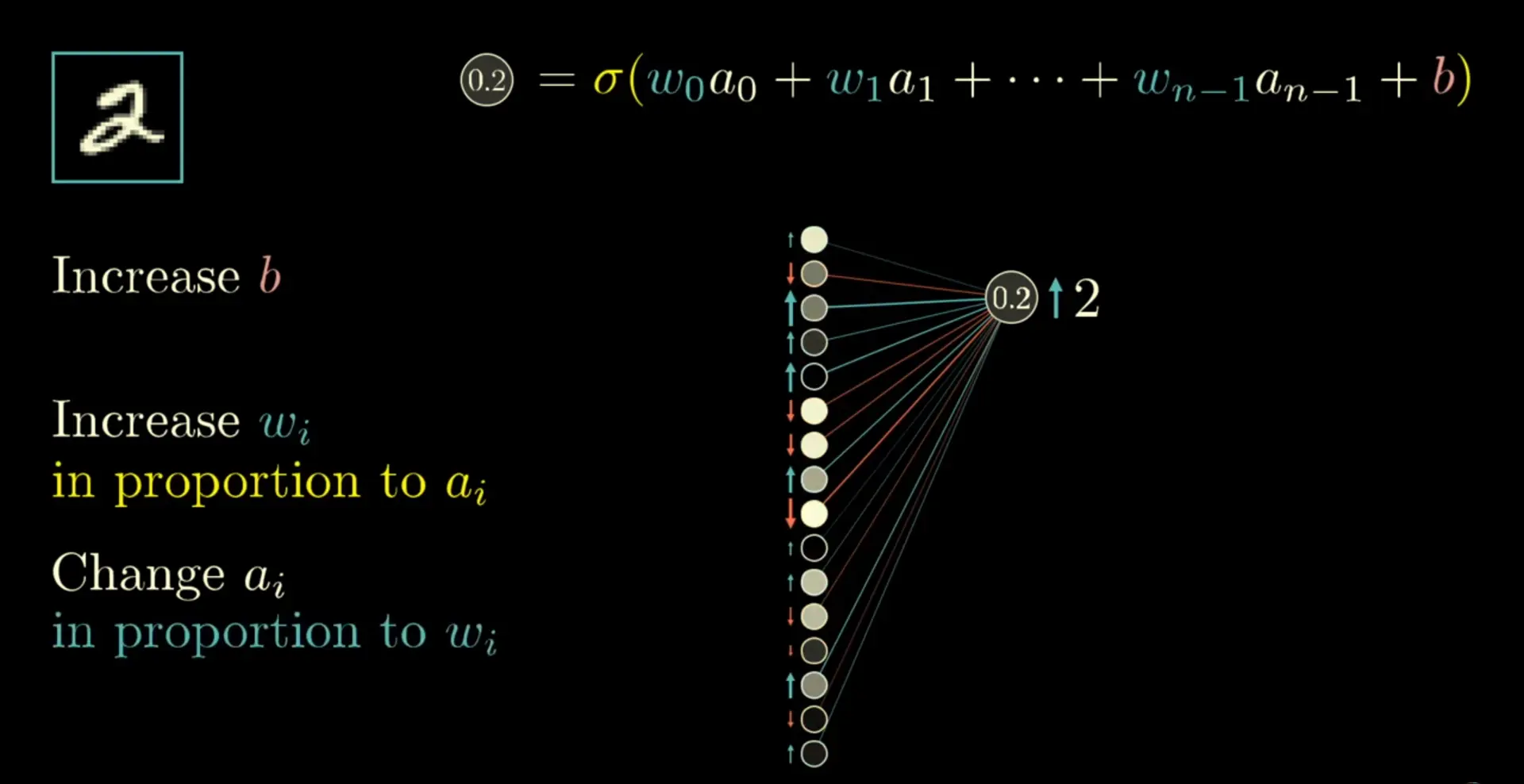
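The comparison in the first step can be sketched as follows. The notes do not name a cost function, so this sketch assumes the common squared-error cost. The function names are hypothetical. It uses the sign convention actual minus expected, so a positive difference means the neuron's activation is too high.

```python
def output_differences(actual, expected):
    """Actual minus expected activation for each output neuron."""
    if len(actual) != len(expected):
        raise ValueError("actual and expected must have the same length")
    return [a - y for a, y in zip(actual, expected)]

def squared_error_cost(actual, expected):
    """Sum of squared differences: large when the output is far from the target."""
    return sum(d * d for d in output_differences(actual, expected))

# Hypothetical example: a 3-class output where the second class is correct.
actual = [0.2, 0.9, 0.1]
expected = [0.0, 1.0, 0.0]
diffs = output_differences(actual, expected)
print(diffs)
print(squared_error_cost(actual, expected))
```

Squaring makes every miss count as a positive cost and penalizes large misses more than small ones. The stored signed differences are what the next steps use to decide which way to nudge each neuron.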

- To change a neuron's activation, you can:

- Change the bias of the current neuron, in proportion to the stored difference between its actual and expected activation

- Change the weights connecting the previous layer's neurons to the current neuron, in proportion to the activations of those previous neurons

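- Change the activations of the previous layer's neurons, in proportion to the weights connecting them to the current neuron. Activations cannot be set directly, so this becomes a desired change for those neurons, and the same process is repeated for them, working backward through the network.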
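The three options above can be read as the three partial derivatives of the cost within one layer. The "repeat" step is what carries the error backward through the network. Below is a minimal pure-Python sketch of that loop. It assumes sigmoid activations, a squared-error cost, and a fully connected network stored as a list of weight matrices and bias vectors. These assumptions and all names (`forward`, `backward`, the 2-3-2 layer sizes) are hypothetical. In the sketch:

- the bias gradient is the neuron's error signal;
- the weight gradient is that signal times the previous neuron's activation;
- the gradient for a previous activation is the sum of error signals weighted by the connecting weights, and that sum becomes the starting error for the layer before.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, biases, x):
    """Return the activations of every layer, starting with the input x."""
    activations = [x]
    for W, b in zip(weights, biases):
        prev = activations[-1]
        z = [sum(w_jk * a_k for w_jk, a_k in zip(row, prev)) + b_j
             for row, b_j in zip(W, b)]
        activations.append([sigmoid(z_j) for z_j in z])
    return activations

def backward(weights, biases, x, expected):
    """Gradients of the squared-error cost with respect to every weight and bias."""
    activations = forward(weights, biases, x)
    # dC/da for the output layer: 2 * (actual - expected).
    grad_a = [2 * (a - y) for a, y in zip(activations[-1], expected)]
    grad_W = [None] * len(weights)
    grad_b = [None] * len(biases)
    # Walk the layers from last to first.
    for layer in reversed(range(len(weights))):
        W = weights[layer]
        a_prev = activations[layer]
        a_cur = activations[layer + 1]
        # Error signal: dC/dz = dC/da * sigmoid'(z), with sigmoid'(z) = a * (1 - a).
        delta = [g * a * (1 - a) for g, a in zip(grad_a, a_cur)]
        # Bias: equal to the neuron's error signal.
        grad_b[layer] = delta
        # Weights: error signal times the previous neuron's activation.
        grad_W[layer] = [[d * a_k for a_k in a_prev] for d in delta]
        # Previous activations: error signals weighted by the connecting weights.
        # This becomes grad_a for the next (earlier) layer in the loop.
        grad_a = [sum(W[j][k] * delta[j] for j in range(len(delta)))
                  for k in range(len(a_prev))]
    return grad_W, grad_b

# Hypothetical 2-3-2 network with random initial weights and zero biases.
random.seed(0)
sizes = [2, 3, 2]
weights = [[[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
           for n_in, n_out in zip(sizes, sizes[1:])]
biases = [[0.0] * n_out for n_out in sizes[1:]]
grad_W, grad_b = backward(weights, biases, [0.5, -0.2], [1.0, 0.0])
```

To train, one would compute these gradients for every example in a batch covering all categories. The next step is to average them and subtract a small multiple of the average from each weight and bias.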