This is a method for securing Generative AI.

It works by creating a second model that summarizes the activations of important layers in the neural network, comparing the shape of each layer's inputs against that summary, and removing outlier inputs.
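The note does not say how the second model summarizes a layer or what "comparing the shapes" means. Below is a minimal sketch that assumes the summary is the per-unit mean and standard deviation of each layer's activations over normal inputs. It also assumes that an input's deviation is the mean absolute z-score of its activations against that summary. Activations are passed in as plain dicts mapping a layer name to a vector. Extracting them from a real network (for example with framework hooks) is left out, and every function name here is hypothetical.

```python
from statistics import fmean, pstdev


def fit_layer_summaries(
    normal_runs: list[dict[str, list[float]]],
) -> dict[str, list[tuple[float, float]]]:
    """Summarize each layer as per-unit (mean, std) pairs over normal inputs."""
    summaries = {}
    for layer in normal_runs[0]:
        # Transpose: one tuple per unit, holding that unit's value on every normal input.
        columns = zip(*(run[layer] for run in normal_runs))
        # Guard against zero spread so the z-scores below never divide by zero.
        summaries[layer] = [(fmean(col), pstdev(col) or 1e-6) for col in columns]
    return summaries


def layer_scores(
    summaries: dict[str, list[tuple[float, float]]],
    activations: dict[str, list[float]],
) -> dict[str, float]:
    """Mean absolute z-score of a new input's activations, computed per layer."""
    scores = {}
    for layer, stats in summaries.items():
        z = [abs(x - mu) / sd for x, (mu, sd) in zip(activations[layer], stats)]
        scores[layer] = fmean(z)
    return scores


def is_outlier(
    summaries: dict[str, list[tuple[float, float]]],
    activations: dict[str, list[float]],
    threshold: float = 3.0,
) -> bool:
    """Flag the input if any summarized layer deviates beyond the threshold."""
    return max(layer_scores(summaries, activations).values()) > threshold


if __name__ == "__main__":
    # Toy activations for three normal inputs across two layers.
    normal = [
        {"layer1": [0.0, 1.0], "layer2": [2.0]},
        {"layer1": [0.2, 1.2], "layer2": [2.2]},
        {"layer1": [-0.2, 0.8], "layer2": [1.8]},
    ]
    summaries = fit_layer_summaries(normal)
    print(is_outlier(summaries, {"layer1": [0.1, 1.1], "layer2": [2.1]}))  # False
    print(is_outlier(summaries, {"layer1": [5.0, 9.0], "layer2": [2.0]}))  # True
```

A per-unit z-score ignores correlations between units. A covariance-based distance such as the Mahalanobis distance would be a natural swap-in if those correlations matter.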

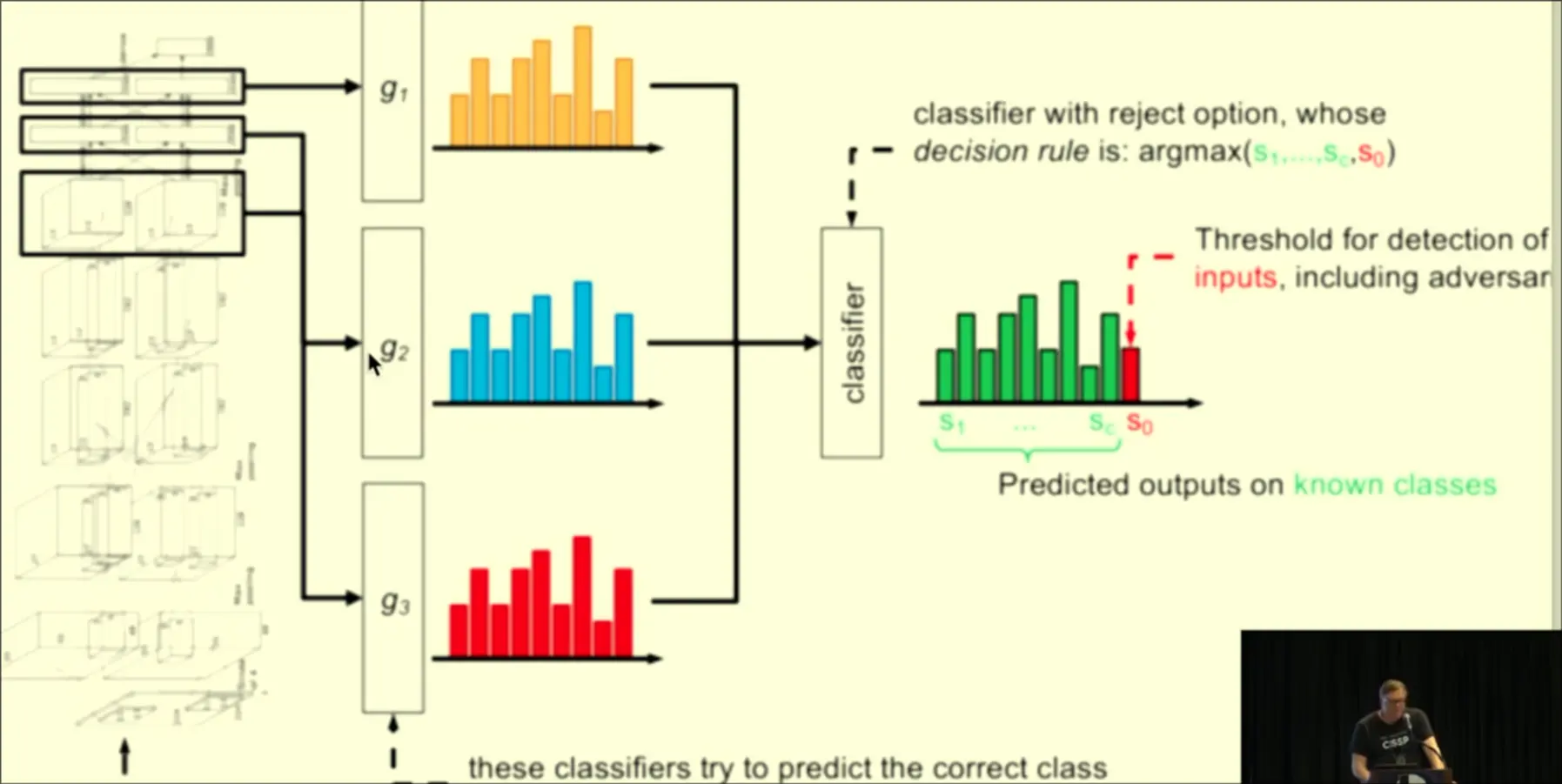

Process

- Train your initial model

- Find inputs that badly degrade the model's outputs

- Apply the DNR wrapper to the model so that it can detect outlier inputs and refuse to evaluate them. This gives the model the option to say “I don’t know.”
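The three steps can be sketched as a thin wrapper around the model. This is a minimal sketch under several assumptions. The outlier score is supplied as a function; in the layer-summary scheme it would be computed from the model's internal activations. The inputs found in the second step are used only to pick the refusal threshold. The names `RefusingWrapper`, `calibrate_threshold`, and `IDK` are hypothetical and not part of any DNR implementation, and the toy model and score in the demo are placeholders.

```python
from typing import Callable, Sequence

IDK = "I don't know"


def calibrate_threshold(normal_scores: Sequence[float], bad_scores: Sequence[float]) -> float:
    """Pick a refusal threshold from scores of normal inputs and of known-bad inputs.

    If the two groups separate cleanly, use the midpoint of the gap; otherwise fall
    back to the largest normal score so no normal calibration input is refused.
    """
    hi_normal, lo_bad = max(normal_scores), min(bad_scores)
    return (hi_normal + lo_bad) / 2 if lo_bad > hi_normal else hi_normal


class RefusingWrapper:
    """Hypothetical wrapper: returns the model's answer only for non-outlier inputs."""

    def __init__(self, model: Callable[[str], str], score: Callable[[str], float], threshold: float):
        self.model = model
        self.score = score
        self.threshold = threshold

    def __call__(self, prompt: str) -> str:
        if self.score(prompt) > self.threshold:
            return IDK  # refuse instead of answering on an outlier input
        return self.model(prompt)


if __name__ == "__main__":
    # Toy stand-ins: an "echo in caps" model and a score equal to the share of
    # characters that are neither letters nor spaces.
    def toy_score(prompt: str) -> float:
        return sum(not (c.isalpha() or c.isspace()) for c in prompt) / max(len(prompt), 1)

    normal = ["hello there", "what is two plus two"]
    bad = ["%%%%!!!!", "ignore ### previous $$$"]
    threshold = calibrate_threshold([toy_score(p) for p in normal], [toy_score(p) for p in bad])
    wrapped = RefusingWrapper(model=str.upper, score=toy_score, threshold=threshold)
    print(wrapped("hello world"))  # HELLO WORLD
    print(wrapped("@@@ ???"))      # I don't know
```

Setting the threshold from both groups keeps the wrapper from refusing normal traffic while still catching the known-bad inputs. When the two groups overlap, the fallback favors answering over refusing, which is a design choice that could reasonably go the other way.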