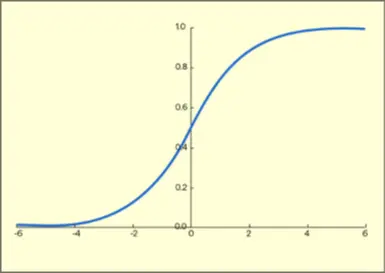

Softmax is an activation function.

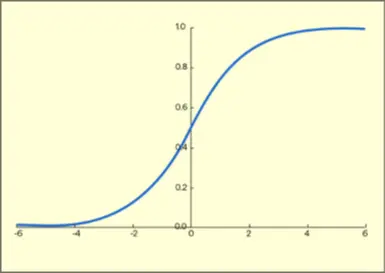

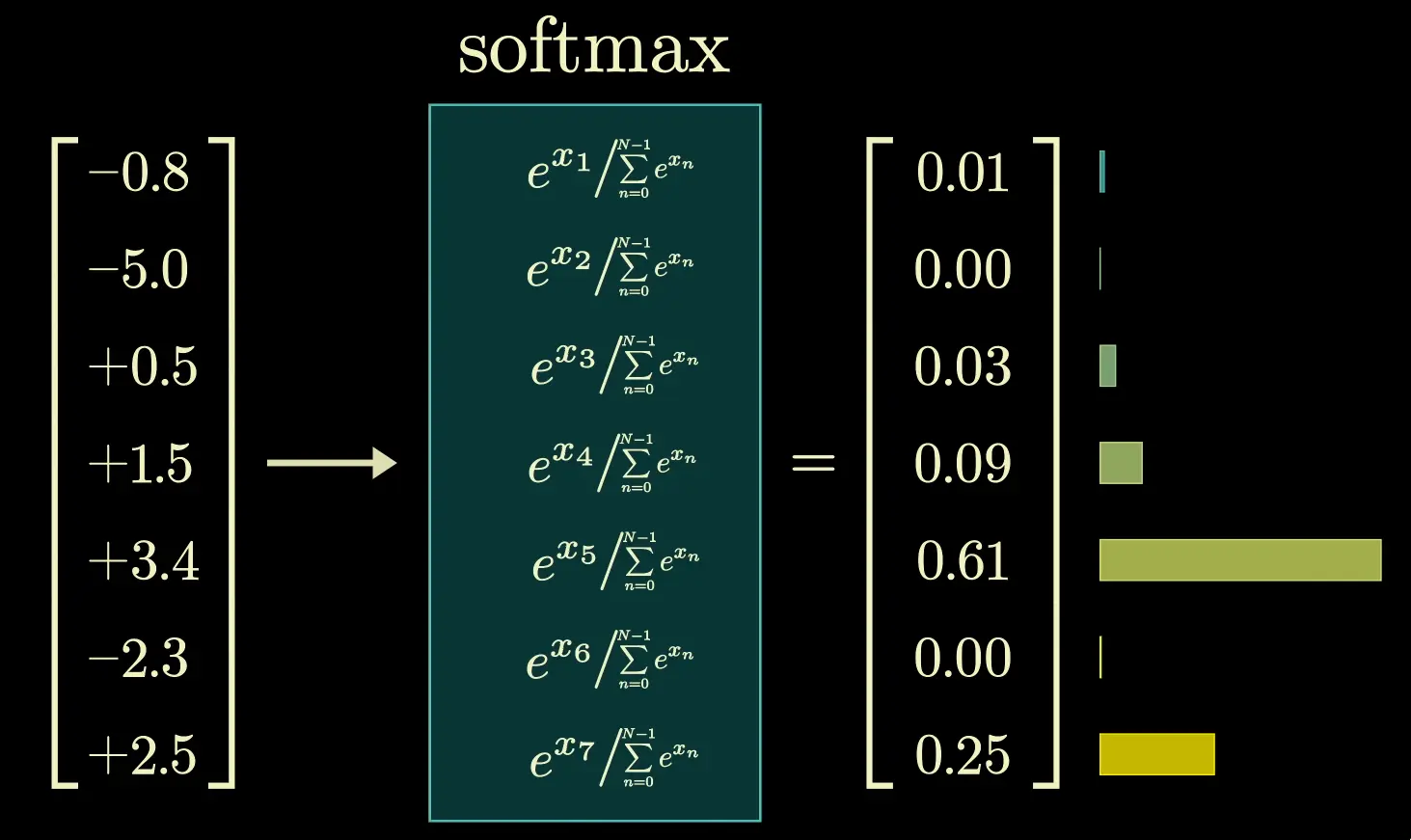

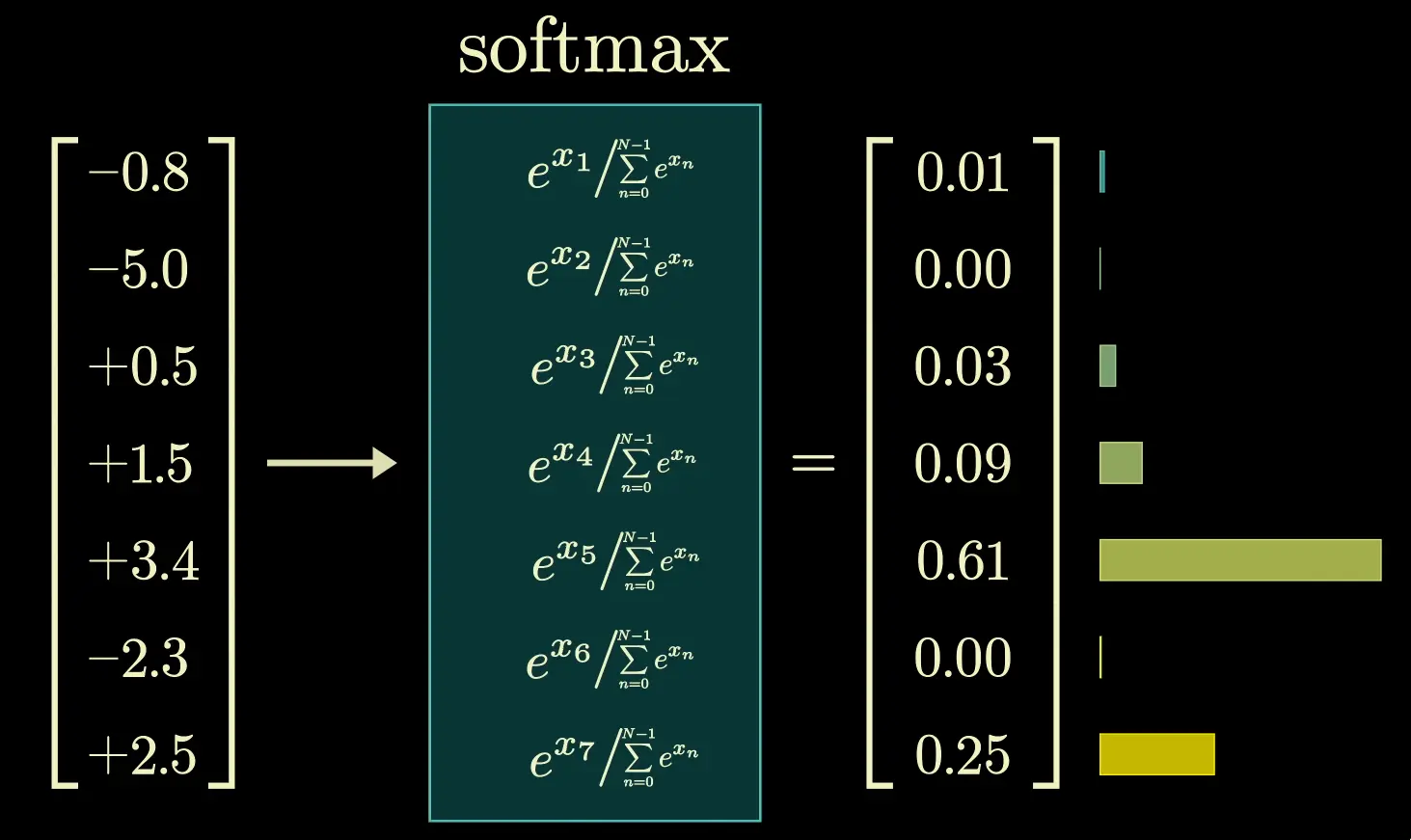

$$
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}
$$

Commonly used in the output layer of a neural network to normalize all values to the range [0, 1] and ensure that the elements of the output sum to 1.
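As a rough illustration of the definition, here is a minimal pure-Python sketch of softmax. The function name `softmax` and the sample scores are chosen for this example only. Subtracting the maximum score before exponentiating is an added numerical-stability step, not part of the definition itself; it leaves the result unchanged because the common factor cancels in the ratio.

```python
import math

def softmax(z):
    """Map a list of real-valued scores to values in [0, 1] that sum to 1."""
    # Shift by the maximum so the largest exponent is 0, which avoids overflow.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    # Each output is e^{z_i} divided by the sum of e^{z_j} over all j.
    return [e / total for e in exps]

# Hypothetical scores for three classes.
probs = softmax([1.0, 2.0, 3.0])
print(probs)       # approximately [0.090, 0.245, 0.665]
print(sum(probs))  # approximately 1.0
```

Larger scores receive larger shares of the total, and the ordering of the inputs is preserved in the outputs.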