Anthropic Defn

- Input text is tokenized, often through byte-pair encoding (BPE).

- The tokenized input is then given a positional encoding, which records where each token sits in the sequence.

- The encoder turns each token ID into a vector the model can work with, often through one-hot encoding (a vector as long as the vocabulary with a single 1).
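
The three steps above can be sketched in plain Python. This is a toy illustration under stated assumptions, not how any particular model implements them: the helper names (`most_frequent_pair`, `merge_pair`, `sinusoidal_position`, `one_hot`) and the sample string are made up for the example, only a single BPE merge is performed, and the sinusoidal scheme is just one common choice of positional encoding. The positional vector is computed on its own here; in most implementations it is combined with the token's embedding.

```python
import math
from collections import Counter

def most_frequent_pair(tokens):
    """Return the most common adjacent pair of symbols (one BPE training step)."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0]

def merge_pair(tokens, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

def sinusoidal_position(pos, d_model):
    """Sinusoidal positional vector for one position (assumed scheme)."""
    return [
        math.sin(pos / 10000 ** (i / d_model)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / d_model))
        for i in range(d_model)
    ]

def one_hot(index, size):
    """Vector of length `size` with a single 1.0 at `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec

# Hypothetical input: start from characters, then apply one BPE merge.
tokens = list("abababc")
pair = most_frequent_pair(tokens)        # ('a', 'b') appears three times
tokens = merge_pair(tokens, pair)        # ['ab', 'ab', 'ab', 'c']

# Map each token to an integer ID, then to a one-hot vector.
vocab = sorted(set(tokens))
ids = [vocab.index(t) for t in tokens]
one_hots = [one_hot(i, len(vocab)) for i in ids]

# One positional vector per sequence position (width 4 is arbitrary).
positions = [sinusoidal_position(p, 4) for p in range(len(tokens))]
```

A real BPE tokenizer repeats the merge step many times over a large corpus and stores the learned merges; the single merge here only shows the mechanism.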

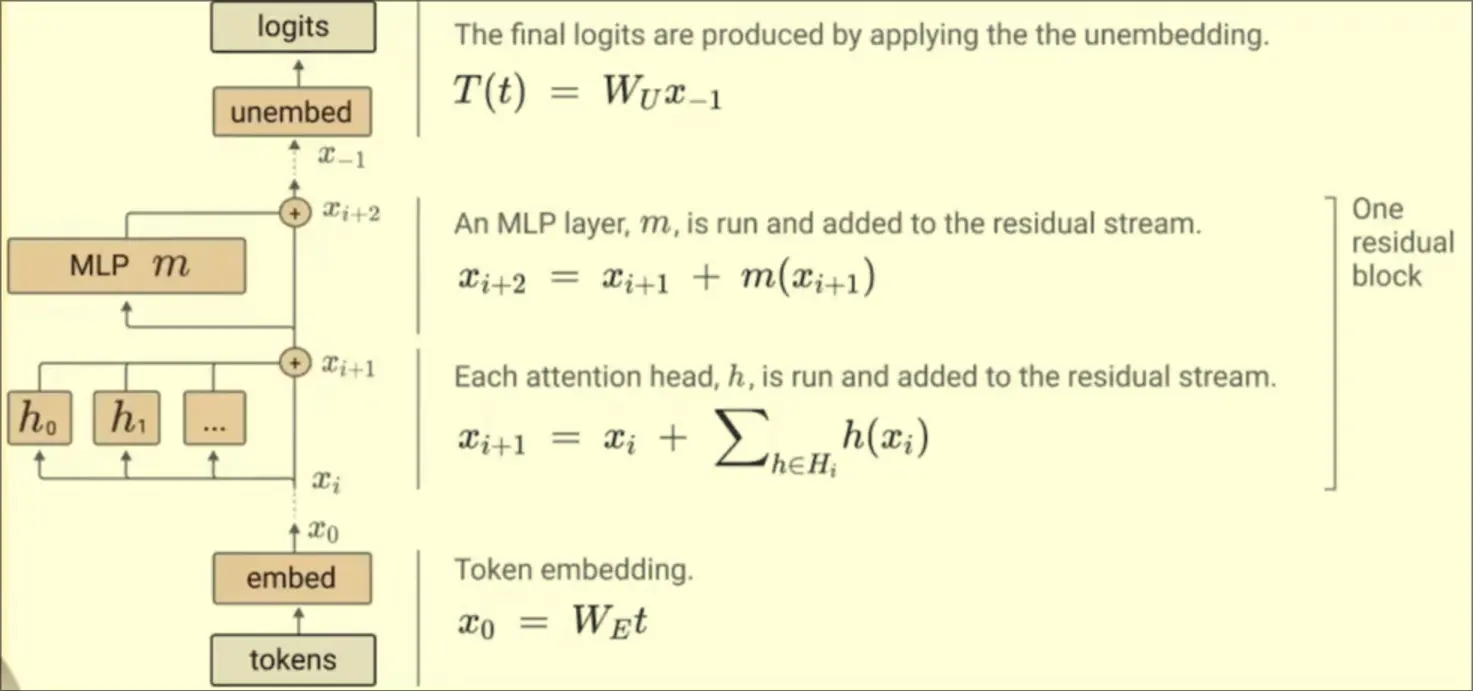

- The encoded text is embedded through a lookup-table matrix (the embedding matrix): multiplying a one-hot vector by this matrix selects the row for that token.

- The embedded text is sent into the residual stream.

- Each layer in the stream is a transformer block (which includes an attention layer).

- A token is picked and the model continues through the causal self-attention layers, where each position attends only to itself and earlier positions. This processing occurs in parallel with other sequences.

- The decoder expands the outputs into generated data.
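
As a rough sketch of the steps in this list, the following plain-Python example runs a tiny made-up model end to end. Everything numeric here is hypothetical (a 3-token vocabulary, width 2, hand-picked embedding values), and several simplifications are assumptions made to keep it short: attention uses the inputs directly as queries, keys and values with no learned projections, each block contains only attention, the final projection reuses the transposed embedding matrix, and the next token is chosen greedily.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """Single-head attention where position i only sees positions <= i.
    Assumption: queries, keys and values are the inputs themselves."""
    d = len(x[0])
    out = []
    for i, q in enumerate(x):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(a * b for a, b in zip(q, x[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * x[j][k] for j, w in enumerate(weights))
                    for k in range(d)])
    return out

# Hypothetical toy values: vocabulary of 3 tokens, model width 2.
embedding = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
token_ids = [0, 1, 2]

# Embedding: a one-hot row times the matrix is the same as a row lookup.
one_hots = [[1.0 if j == t else 0.0 for j in range(3)] for t in token_ids]
embedded = matmul(one_hots, embedding)

# Residual stream: each block adds its output back onto the stream.
stream = [row[:] for row in embedded]
for _ in range(2):  # two blocks, chosen arbitrarily
    attn = causal_self_attention(stream)
    stream = [[s + a for s, a in zip(s_row, a_row)]
              for s_row, a_row in zip(stream, attn)]

# Final projection back to vocabulary scores (tied weights are an assumption).
unembed = [list(col) for col in zip(*embedding)]
logits = matmul(stream, unembed)

# Greedy choice of the next token from the last position's scores.
next_token = max(range(3), key=lambda t: logits[-1][t])
```

Because the first position can only attend to itself, its output never depends on later tokens, which is the property that lets a causal model be trained on all positions of a sequence at once.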